Prof. Dr. Thomas Brox (CIBSS-PI), Department of Computer Science, University of Freiburg

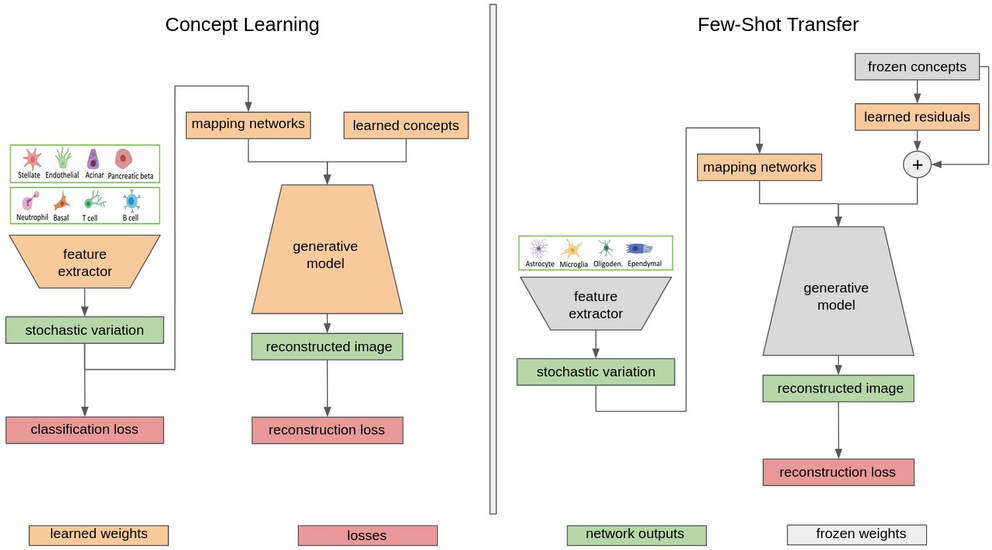

Humans can learn new tasks by adapting their existing knowledge after seeing only a few examples, or even a single one. Such a capability is highly desirable for neural networks, since it provides flexibility and data efficiency. Recent findings indicate that the intrinsic dimension of image datasets is relatively low [Pope et al. 2021]. Accordingly, we can split the representation of an image into high-level concepts and stochastic variation. Since these intrinsic concepts are shared across samples from different classes, we extract them from a large base dataset. We then train a generative model that, conditioned on the stochastic variation, interprets the concepts to reconstruct the input images. Adapting to new tasks or classes consists of capturing any concepts that may be missing and adjusting the concept magnitudes so that the few available samples are reconstructed. Strong data augmentation and the low number of trainable parameters prevent overfitting to the training samples. In general, we expect this method not only to solve the few-shot learning problem but also to allow detection of out-of-distribution samples and to generalize to continual learning scenarios.
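
The idea of shared concepts plus a small number of adapted parameters can be illustrated with a deliberately simplified linear toy. This is not the project's generative model: a truncated SVD stands in for concept extraction, a per-class vector of concept magnitudes stands in for the adapted parameters, and reconstruction error stands in for the out-of-distribution signal. All names, dimensions, and noise levels below are assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
d, k = 32, 4  # ambient dimension and assumed (low) intrinsic dimension

# Hypothetical ground-truth concepts shared by all classes (orthonormal rows).
true_concepts = np.linalg.qr(rng.normal(size=(d, k)))[0].T  # shape (k, d)

def sample_class(magnitudes, n):
    """Draw n samples: class-specific concept magnitudes plus stochastic variation."""
    codes = magnitudes + 0.1 * rng.normal(size=(n, k))
    return codes @ true_concepts + 0.01 * rng.normal(size=(n, d))

# 1) Extract shared concepts from a large base dataset.
#    Assumption: the top-k right singular vectors play the role of the concepts.
base = np.vstack([sample_class(rng.normal(size=k), 200) for _ in range(10)])
concepts = np.linalg.svd(base, full_matrices=False)[2][:k]  # (k, d), frozen afterwards

def encode(x):
    """Project samples onto the frozen concept basis."""
    return x @ concepts.T

def reconstruction_error(x):
    """Distance between each sample and its reconstruction from the concepts."""
    return np.linalg.norm(x - encode(x) @ concepts, axis=-1)

# 2) Adapt to a novel class from three samples: only k magnitudes are estimated.
novel_magnitudes = np.array([2.0, -1.0, 0.5, 1.5])
few_shot = sample_class(novel_magnitudes, 3)
adapted = encode(few_shot).mean(axis=0)

# 3) Compare reconstruction error on in-distribution and unrelated inputs.
in_dist_error = reconstruction_error(sample_class(novel_magnitudes, 50)).mean()
ood_error = reconstruction_error(rng.normal(size=(50, d))).mean()
print(f"in-distribution: {in_dist_error:.3f}, out-of-distribution: {ood_error:.3f}")
```

In this toy, only `k` numbers are fitted for the novel class, which is why three samples suffice, and inputs that do not lie near the concept subspace reconstruct poorly. A real image model would replace the linear projection with a learned generative network.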